Artificial Intelligence Technology and Systems (AITS) Laboratory

Technical areas: Machine learning, AI, deep learning, hardware accelerators, hardware introspection, self-aware systems, secure and private ML, ML systems, sparse neural networks, secure ML accelerators.

Current Projects

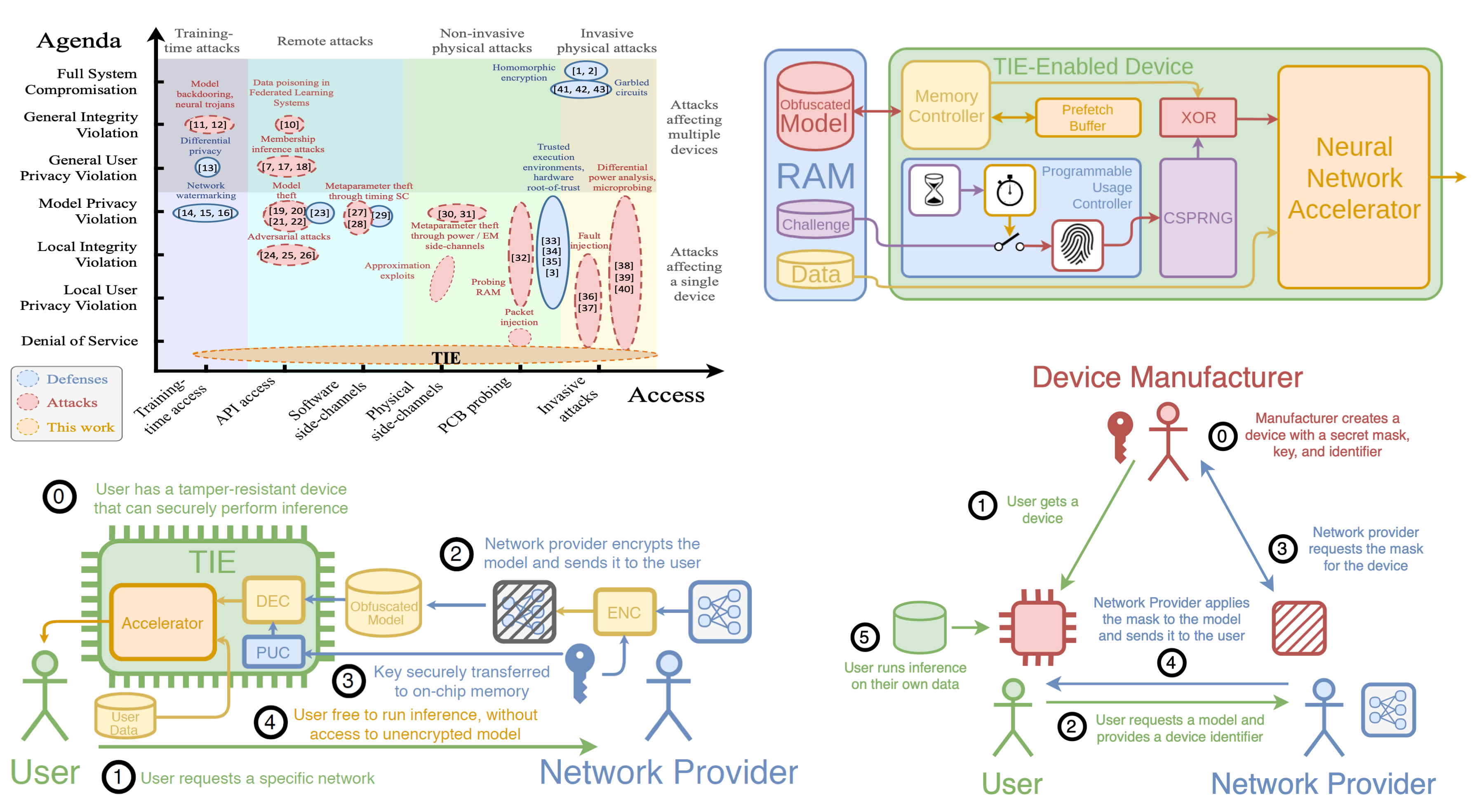

The team is developing algorithms, protocols and hardware solutions that allow designers to securely distribute their models without the risk of exfiltration.

Trustworthy and Privacy-Preserving Machine Learning Systems

As companies push to incorporate artificial intelligence, and machine learning in particular, into their Internet of Things (IoT), system-on-chip (SoC), and automotive applications, they will have to address a number of design challenges related to the secure deployment of learning models and techniques.

Securing Execution of Neural Network Models on Edge Devices

Deploying neural network models in the cloud is not feasible or effective in many cases. If the application requires near-instantaneous inference and cannot tolerate the round-trip latency of calls to a remote cloud server, then edge computation is often the only viable solution. These models are often trained, using large amounts of computing power, on private datasets that are very expensive to collect or highly sensitive. They are commonly either exposed through online APIs or embedded in hardware devices that are deployed in the field or given to end users. This gives adversaries an incentive to steal these ML models as a proxy for gathering the datasets themselves.

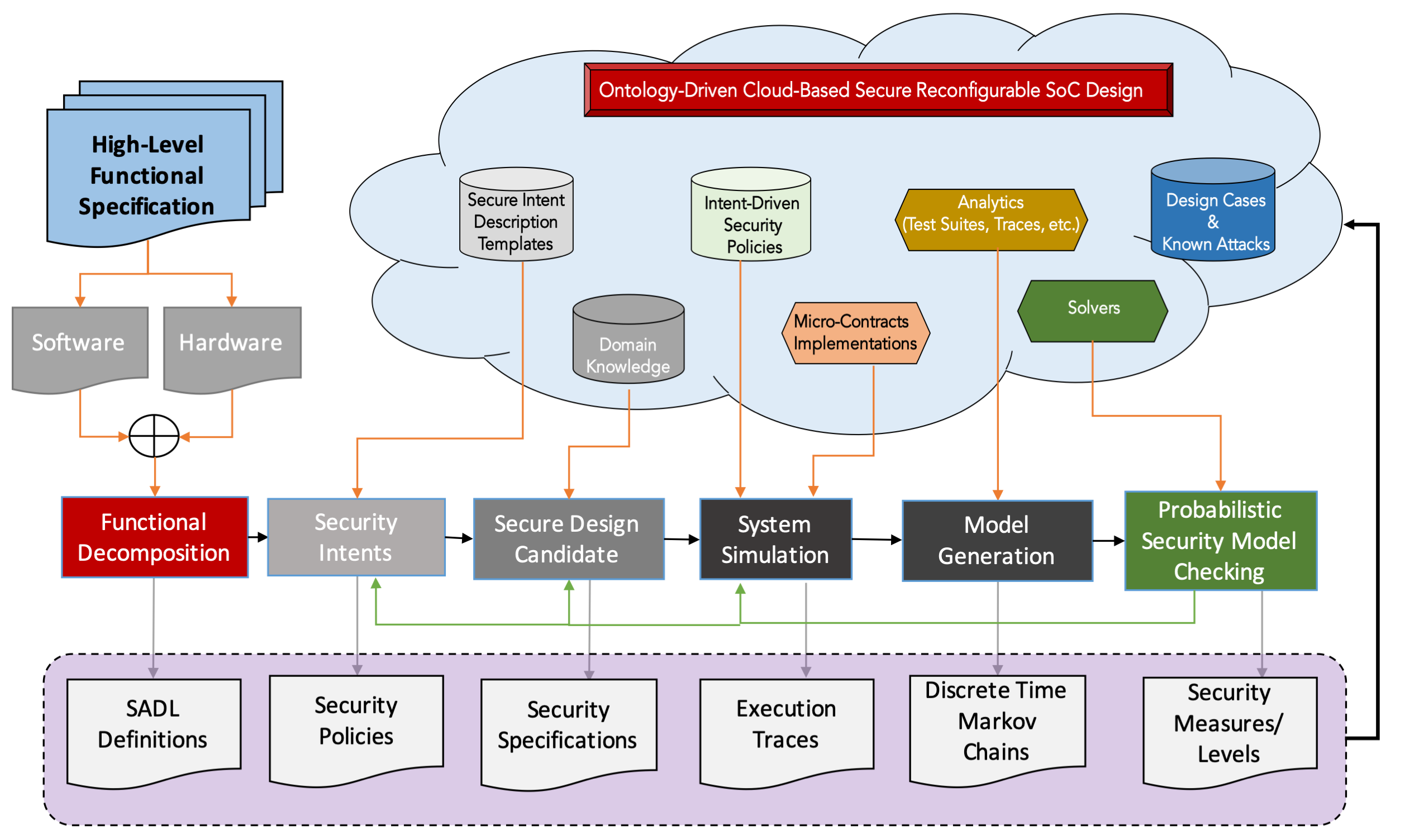

SODA: Security Intent-Aware Ontology-Driven Architecture Design Platform

With the ever-increasing complexity of current SoCs, ad hoc or independent security policy definitions and implementations have become unworkable. Considering security policies in isolation leads to lower risk-assessment coverage, side effects, and composition inconsistencies.

The notions of security and secure system design are highly contextual. Therefore, their specification, interpretation, implementation, and evaluation must also be context-based. Under this research effort, we use (1) user- or designer-defined security intents, (2) crowd-sourced or curated aggregated domain knowledge and expertise, and (3) machine-learning-enabled automatic composition of security policy implementations to drive a next-generation secure reconfigurable SoC design flow.

Publications

- M. Isakov, M. Currier, E. del Rosario, S. Madireddy, P. Balaprakash, P. H. Carns, R. Ross, G. K. Lockwood, and M. A. Kinsy: “A Taxonomy of Error Sources in HPC I/O Machine Learning Models”, In the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC), 2022.

- G. Dessouky, M. Isakov, M. A. Kinsy, P. Mahmoody, M. Mark, A. Sadeghi, E. Stapf, and S. Zeitouni: “Distributed Memory Guard: Enabling Secure Enclave Computing in NoC-based Architectures”, In the 58th ACM/EDAC/IEEE Design Automation Conference (DAC), 2021.